Navigating the AI Transformation Tightrope: Balancing Innovation, Regulation, and Human Capital

The artificial intelligence landscape in late 2025 presents a complex picture of immense opportunity tempered by significant operational challenges....

Peter Vogel: September 15, 2025

The AI landscape continues to evolve at breakneck speed, demanding a strategic and considered approach from businesses of all sizes. Emerging patterns signal a shift from simple AI adoption to a more nuanced understanding of ethical considerations, practical automation solutions, and the growing need for control and transparency.

This week, we examine critical developments shaping AI adoption, focusing on ethical content creation, the maturing market for AI coding assistants, the balance between automation and human touch in customer service, data security and compliance, and the rise of steerable AI. We’ll distill key AI news into actionable insights for decision-makers, addressing pressing questions around these topics.

This article is tailored for Operations/Technology Executives seeking operational efficiency and digital transformation, Marketing Leaders looking to enhance marketing capabilities through AI, Growth-Focused CEOs aiming for competitive advantage and scalability, Sales Directors interested in improving conversion rates and team productivity, and Customer Service Leaders looking to enhance service while maintaining quality. We aim to equip you with the knowledge to navigate this dynamic landscape effectively and responsibly.

The increasing reliance on AI for content generation raises ethical concerns around authenticity, transparency, and the potential for misinformation. As AI becomes more sophisticated, the line between human-created and AI-generated content blurs, potentially eroding consumer trust.

There is a growing awareness of AI-generated content and its potential to erode trust in brands. The rise of the authentic AI movement promotes transparency in AI-assisted content creation, advocating for clear disclosures when AI is used to generate marketing copy, social media posts, or customer service responses. Several European jurisdictions have already introduced preliminary guidelines requiring disclosure of AI assistance in professional communications, particularly for content related to financial advice, healthcare, and legal services. Research indicates that transparency about AI usage actually increases trust in personal brands, contrary to initial industry concerns.

Organisations need to establish clear ethical guidelines for AI content creation, including transparency policies and mechanisms for human oversight. This involves training content teams on ethical AI usage and implementing tools that can detect and flag AI-generated content. Balancing the efficiency gains of AI with the need to maintain brand voice and authenticity is a key challenge.

AI coding assistants are rapidly transforming software development, offering significant productivity gains and enabling non-technical users to contribute to development projects. As AI models become more sophisticated, they can automate routine coding tasks, generate code snippets, and even assist with debugging.

Continued improvement in AI coding models is driving adoption. OpenAI's Codex reportedly saw a 10x usage spike in two weeks and drew renewed praise for its reliability from figures including Sam Altman, Yam Peleg, and Pieter Levels. Brian Armstrong, CEO of Coinbase, reported that 40% of the company's code is AI-generated, highlighting the growing role of AI in software development. The rise of open-source and low-code/no-code AI coding assistants is making these technologies accessible to a wider range of users. Investor enthusiasm remains high, with significant funding flowing into AI-powered development platforms: Cognition, creator of the AI coding agent Devin, raised $400M at a $10.2B valuation, with annual recurring revenue (ARR) jumping from $1M to $73M in less than a year.

Organisations need to evaluate and integrate AI coding assistants into their workflows strategically, focusing on code quality, security, and developer training. This involves establishing clear guidelines for AI usage, implementing robust code review processes, and providing developers with the necessary skills to leverage these tools effectively.

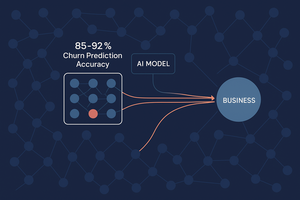

AI-powered chatbots and virtual assistants are becoming increasingly prevalent in customer service, offering 24/7 support and improving response times. As AI technology advances, these systems can handle a wider range of customer inquiries, freeing up human agents to focus on more complex or sensitive issues.

Increasingly sophisticated natural language understanding (NLU) and sentiment analysis enable chatbots to read customer needs and emotions more accurately. However, there are significant concerns about job displacement. Salesforce CEO Marc Benioff has confirmed that AI is already replacing thousands of support roles, saying he needs fewer heads after cutting support headcount from 9,000 to 5,000. This underscores the urgent need to reskill customer service agents.

Organisations need to carefully design their AI customer service solutions to ensure a seamless and positive customer experience. This includes providing clear escalation paths to human agents, personalising automated interactions, and ensuring data privacy and security.
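One way to picture a clear escalation path is as an explicit routing rule that decides when a conversation leaves the bot. The sketch below is illustrative only: the `Inquiry` fields, the `SENSITIVE_TOPICS` list, and both thresholds are hypothetical placeholders, not values drawn from any particular platform, and a real deployment would tune them against its own data.

```python
from dataclasses import dataclass

# Hypothetical topics that should always reach a human agent.
SENSITIVE_TOPICS = {"billing_dispute", "account_closure", "complaint"}


@dataclass
class Inquiry:
    topic: str                     # label from an upstream classifier (assumed)
    sentiment: float               # -1.0 (very negative) to 1.0 (very positive)
    bot_confidence: float          # 0.0 to 1.0, the bot's confidence in its answer
    asked_for_human: bool = False  # customer explicitly requested a person


def route(inquiry: Inquiry,
          min_sentiment: float = -0.4,
          min_confidence: float = 0.7) -> str:
    """Return "human" or "bot" for a single inquiry."""
    if inquiry.asked_for_human:
        return "human"  # an explicit request always wins
    if inquiry.topic in SENSITIVE_TOPICS:
        return "human"  # sensitive topics bypass automation
    if inquiry.sentiment < min_sentiment:
        return "human"  # frustrated customers get a person
    if inquiry.bot_confidence < min_confidence:
        return "human"  # the bot is unsure of its own answer
    return "bot"


if __name__ == "__main__":
    print(route(Inquiry("order_status", sentiment=0.2, bot_confidence=0.9)))
    print(route(Inquiry("order_status", sentiment=-0.8, bot_confidence=0.9)))
```

Keeping the rule this explicit makes it easy to audit why a given conversation was or was not handed to a person.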

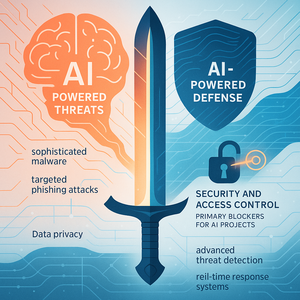

AI implementation requires access to vast amounts of data, raising significant concerns around data security, privacy, and compliance with regulations like GDPR and CCPA. As AI models become more data-hungry, organisations must ensure that they are collecting, storing, and using data responsibly and ethically.

Growing legal scrutiny of AI data practices is forcing organisations to re-evaluate their data governance frameworks. For example, Meta has faced increasing legal constraints around datasets, with publishing decisions sometimes requiring approval from Mark Zuckerberg because of the legal risks involved. The litigious environment is also evident in Anthropic's $1.5 billion settlement over the use of pirated training data, which sets a significant benchmark for copyright claims against AI developers. The increased focus on data provenance and lineage is driving demand for tools that can track the origins and usage of data throughout the AI lifecycle. Emerging data security and privacy technologies, such as federated learning and differential privacy, offer potential ways to protect sensitive information.
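To make differential privacy less abstract, here is a minimal sketch of the Laplace mechanism applied to a counting query, using only Python's standard library. The function names, the `opted_in` field, and the choice of `epsilon` are assumptions made for illustration; production systems would normally rely on a vetted privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`.

    Adding or removing one record changes a count by at most 1, so the
    query has sensitivity 1 and the Laplace scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random()
    # Hypothetical dataset: 30 customers opted in, 70 did not.
    customers = [{"opted_in": True}] * 30 + [{"opted_in": False}] * 70
    noisy = private_count(customers, lambda c: c["opted_in"], epsilon=0.5, rng=rng)
    print(f"Noisy opt-in count: {noisy:.1f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger one gives more accurate answers. Choosing that trade-off is a governance decision as much as a technical one.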

Organisations need to implement robust data governance frameworks to ensure compliance and protect sensitive information. This includes implementing data encryption, access controls, and audit trails, as well as establishing clear policies for data retention and disposal.

Concerns around the potential for AI models to exhibit unpredictable or harmful behaviour have led to increased interest in steerable AI – models that can be controlled and guided more effectively. As AI models become more powerful, it is essential to ensure that they align with human values and can be steered away from unintended consequences.

The development of new AI architectures that prioritise steerability and transparency is gaining momentum. Techniques such as reinforcement learning, used to align model behaviour with human values, are showing promising results, as seen in OpenAI's strategic shift towards exploring reinforcement learning as an additional path for progress. The AI safety community's positive reception of controlled AI models, particularly the design of Cicero (an AI developed to play Diplomacy), indicates a growing consensus on the importance of steerability. Cicero uses a reasoning system to guide its language model, with generated messages conditioned on specific actions, which demonstrated that such systems can be both interpretable and steerable.

Organisations need to prioritise the development and deployment of steerable AI models to ensure responsible and ethical AI adoption. This involves investing in research and development of new AI architectures, as well as establishing clear guidelines for model behaviour and monitoring.
